How to Destroy The Myth of Cheap Wind and Solar

If Wind and Solar Are So Cheap, Why Do They Make Electricity So Expensive?

Have you ever wanted to destroy the arguments claiming that wind and solar are the cheapest forms of energy, but you weren’t sure how to do it? Fear not, dear reader; the Energy Bad Boys have you covered!

The Myth of Cheap Wind and Solar

Wind and solar advocates often cite a metric called the Levelized Cost of Energy (LCOE) to claim that these energy sources are cheaper than coal, natural gas, and nuclear power plants.[1]

However, these claims, which are already tenuous due to rising wind and solar costs, ignore virtually all of the hidden real-world costs associated with building and operating wind turbines and solar panels while also keeping the grid reliable, including:

Additional transmission expenses to connect wind and solar to the grid;

Additional costs associated with Green Plating the grid;

Additional property taxes because there is more property to tax;

“Load balancing costs,” which include the cost of backup generators and batteries;

Overbuilding and curtailment costs incurred when wind and solar are overbuilt to meet demand during periods of low wind and solar generation and are turned off during periods of higher output to avoid overloading the grid.

These comparisons also ignore the cost differential between low-cost, existing power plants and new power plants.

Add all of these factors together, and you have a recipe for soaring electricity prices due to the addition of new wind, solar, and battery storage on the electric grid.

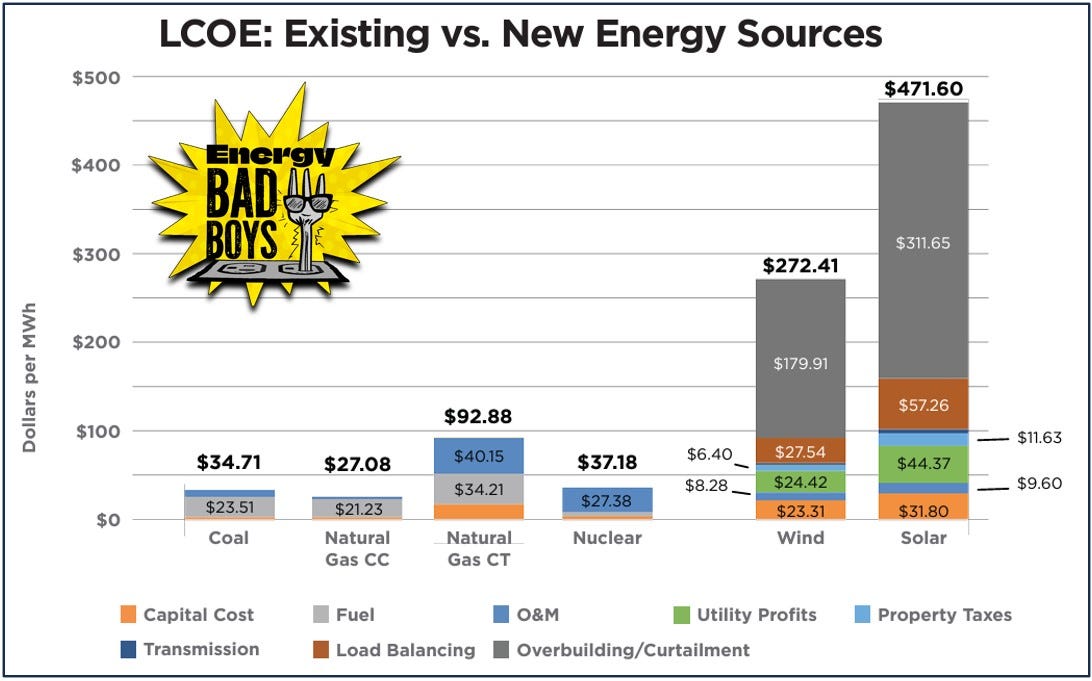

To remedy this situation, we developed a model to calculate the levelized cost of intermittency (LCOI), which captures the additional costs borne by the entire electric system as ever-growing levels of intermittent wind and solar generation are incorporated into the electric grid.

Our model attributes these additional costs to the wind and solar generators on a per megawatt-hour (MWh) basis to provide readers with an apples-to-apples comparison of the cost of providing reliable electricity service after accounting for the different attributes for dispatchable and non-dispatchable resources.

The graph below is from our study examining a 100 percent carbon-free electricity mandate in Minnesota, where 80 percent of the state’s energy is provided by wind, solar, or battery storage.

As you can see, relying on wind and solar to provide the bulk of electricity demand results in much higher costs compared to using the existing coal, natural gas, and nuclear power plants currently serving the grid.

How did the LCOE become so misinterpreted by renewable advocates? Because it stems from a time when, for the most part, only reliable power facilities were built on the grid, and it has been grandfathered into the present energy environment where less reliable, weather-based resources are now a major consideration. However, as we’ll explain below, LCOE estimates are no longer an appropriate metric for assessing system costs on the electric grid with these intermittent energy sources included.

Before we examine the hidden factors that make wind and solar so expensive, it helps to understand what the LCOE is, and its limitations.

What is the Levelized Cost of Energy?

The LCOE is a cost estimate that reflects the cost of generating electricity from different types of power plants on a per-unit-of-energy basis—generally megawatt hours (MWh)—over an assumed lifetime and quantity of electricity generated by the plant.

In this way, LCOE estimates are like calculating the cost of your car on a per-mile driven basis after accounting for expenses like your initial down payment, loan, insurance payments, fuel costs, and maintenance.

The main factors influencing the LCOE for power plants are the capital costs incurred for building the facility, financing costs, fuel costs, fuel efficiency, variable operational and maintenance (O&M) costs such as water consumption or pollution reduction compounds, fixed O&M costs such as routine labor and administrative expenses, and the number of years the power plant is expected to be in service. The total of these costs is then divided by the amount of electricity the facility is expected to generate during its useful lifetime.
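To make the arithmetic concrete, here is a minimal sketch of a simplified LCOE calculation: discounted lifetime costs divided by discounted lifetime generation. The function name, the assumption that all capital is spent up front, the single discount rate standing in for financing costs, and every number in the usage example are illustrative assumptions, not inputs from the model described in this article.

```python
def lcoe(capital_cost, fixed_om_per_year, variable_om_per_mwh,
         fuel_cost_per_mwh, annual_mwh, lifetime_years, discount_rate):
    """Simplified LCOE in $/MWh: discounted costs / discounted generation.

    Assumes capital is spent in year 0 and that output and costs are the
    same in every year of service (both are simplifying assumptions).
    """
    total_cost = capital_cost
    total_mwh = 0.0
    for year in range(1, lifetime_years + 1):
        discount = (1 + discount_rate) ** year
        yearly_cost = (fixed_om_per_year
                       + (variable_om_per_mwh + fuel_cost_per_mwh) * annual_mwh)
        total_cost += yearly_cost / discount
        total_mwh += annual_mwh / discount
    return total_cost / total_mwh


# Hypothetical 100 MW plant running at a 50 percent capacity factor.
annual_mwh = 100 * 8760 * 0.5
example = lcoe(capital_cost=150_000_000, fixed_om_per_year=2_000_000,
               variable_om_per_mwh=3.0, fuel_cost_per_mwh=25.0,
               annual_mwh=annual_mwh, lifetime_years=30, discount_rate=0.07)
print(f"Illustrative LCOE: ${example:.2f}/MWh")
```

Note that the denominator is the electricity actually generated, so anything that reduces a plant's output raises its cost per MWh even if nothing else changes.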

If you want to learn more about these variables, you can check out this link.

Limitations of LCOE

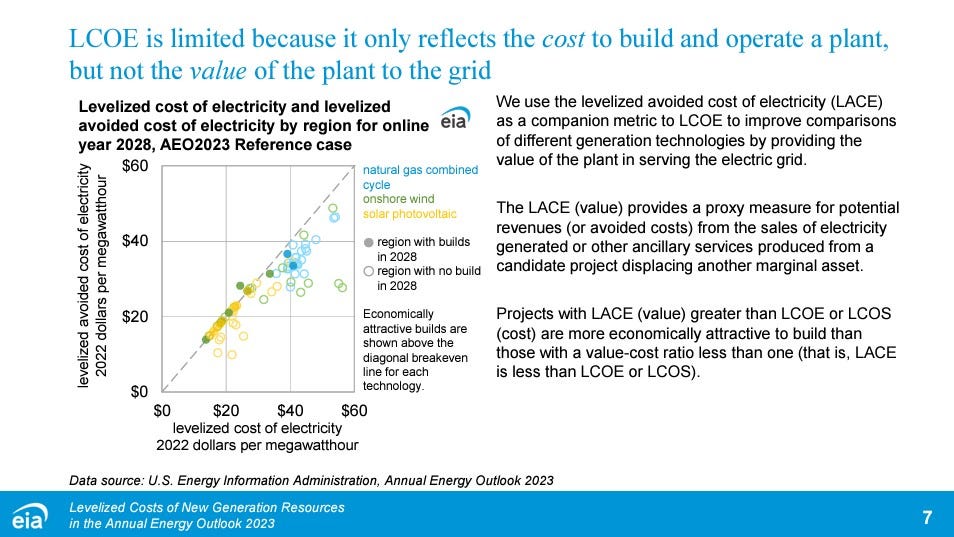

While the use of LCOE may have been appropriate in the past when most, if not all, newly proposed generating units were dispatchable, the introduction of intermittent renewable resources has made LCOE calculations less informative over time because this metric was developed to compare resources that were able to provide the same reliability value to the grid.

Because wind and solar cannot supply reliable power on demand like dispatchable energy sources such as coal, natural gas, or nuclear power, comparing LCOE estimates for dispatchable and non-dispatchable electricity sources is not an apples-to-apples comparison of value.

This is why the U.S. Energy Information Administration explicitly cautions readers against comparing the LCOE of dispatchable and non-dispatchable resources. Unfortunately, this word of caution is seldom heeded.

In other words, LCOE estimates do not reflect the system cost of utilizing each energy source on electrical grids. They are the cost incurred by the developers for each project (or the revenue needed for cost recovery). They do not cover the total cost incurred by energy consumers who pay not only for the facility and its production but also for transforming the grid to accommodate and provide backup for these energy sources.

Assessing the System Cost of Each Energy Source

The only true way to assess the system cost of each energy source is to model the entire grid using each technology at desired penetration levels, including each of the hidden costs mentioned above, and compare the costs of the new grid to a realistic baseline that includes continuing to utilize the existing system. This is especially true for non-dispatchable generators.

We flesh out the hidden cost drivers that we evaluate in our modeling to determine the true “All-In” LCOE of different resources below.

Transmission Costs

Transmission lines are important: It is pointless to generate electricity if it cannot be transported to the homes and businesses that rely upon it. Transmission costs will be much higher on an electric grid with high penetrations of wind and solar than on systems reliant upon dispatchable generators. This is because wind and solar are often located farther away from high-energy-use areas and would require a large buildout of costly transmission capacity to move their power across the country, depending on where they happen to be producing electricity at the time.

A report by the Energy Systems Integration Group examining six studies found that a reliable power system that depends on very high levels of renewable energy would be impossible to implement without doubling or tripling the size and scale of the nation’s transmission system.

Similar conclusions were drawn by Wood Mackenzie analysts, who estimated that achieving a 100 percent renewable grid would require doubling the nation’s 200,000 miles of high-voltage transmission lines at a cost of $700 billion.

Green Plating Costs

Most LCOE calculations include a utility return or capital cost in their calculations, but because these LCOE estimates don’t include the cost of transmission or other system costs, they don’t fully capture the amount of money that will be siphoned from ratepayers as profits for utility shareholders.

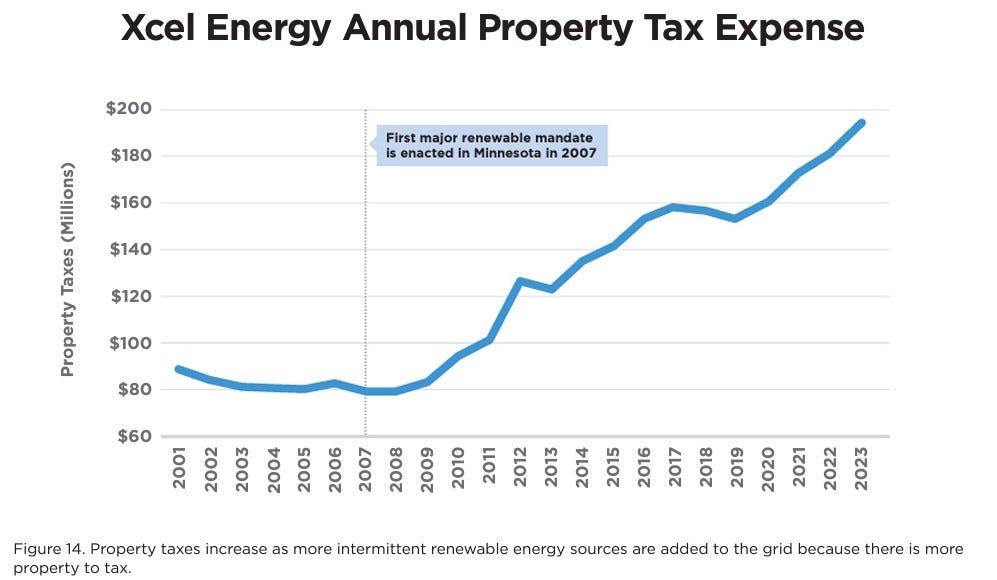

Property Taxes

Property taxes increase due to wind and solar buildouts because, compared with operating the existing grid, there is much more property to tax: wind turbines, solar panels, substations, and transmission lines.

While the property taxes assessed on power plants are often a crucial revenue stream for local communities that host power plants, these taxes also effectively increase the cost of producing and providing electricity for everyone.

For example, Xcel Energy saw its property taxes rise sharply as it built wind, solar, and transmission facilities to satisfy Minnesota’s original renewable energy mandate, which was passed in 2007.

Load Balancing Costs

Attributing load balancing costs to wind and solar allows for a more equal comparison of the expenses incurred to meet electricity demand between non-dispatchable energy sources, which require a backup generation source to maintain reliability, and dispatchable energy sources like coal, natural gas, and nuclear facilities that do not require backup generation.

The key determinant of the load balancing cost is whether natural gas or battery storage is used as the “firming” resource. While natural gas provides relatively affordable firm capacity, battery storage is often prohibitively expensive.

We calculate load balancing costs by determining the total cost of building and operating new battery storage or natural gas facilities to meet electricity demand during the time horizon studied. In the case of our Minnesota report, we used battery storage due to the state’s carbon-free mandate.

These costs are then attributed to the LCOE values of wind and solar by dividing the cost of load balancing by the generation of new wind and solar facilities (capacity-weighted).
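The attribution step can be sketched roughly as follows. This is one possible reading of "capacity-weighted," assumed here for illustration: the firming cost is split between wind and solar in proportion to installed capacity, and each share is then divided by that resource's generation. The function name, the weighting rule, and all numbers are hypothetical and are not taken from the Minnesota report.

```python
def load_balancing_adder(firming_cost, capacity_mw, generation_mwh):
    """Return a $/MWh load balancing adder for each resource.

    firming_cost:    total cost of building and operating the backup
                     resource (batteries or natural gas), in dollars
    capacity_mw:     dict of installed capacity by resource name
    generation_mwh:  dict of generation by resource name
    """
    total_capacity = sum(capacity_mw.values())
    adders = {}
    for name, capacity in capacity_mw.items():
        share = capacity / total_capacity  # assumed capacity weighting
        adders[name] = firming_cost * share / generation_mwh[name]
    return adders


# Hypothetical inputs: $500 million of firming cost shared by new wind and solar.
adders = load_balancing_adder(
    firming_cost=500_000_000,
    capacity_mw={"wind": 3000, "solar": 2000},
    generation_mwh={"wind": 9_000_000, "solar": 3_500_000},
)
for name, adder in adders.items():
    print(f"{name}: ${adder:.2f}/MWh added to its LCOE")
```

Whatever weighting is used, the adders multiplied by each resource's generation should sum back to the total firming cost, which is a useful sanity check.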

Overbuilding and Curtailment Costs

The cost of battery storage for meeting electricity demand is prohibitively high, so many wind and solar advocates argue that it is better to overbuild renewables, often by a factor of five to eight compared to the dispatchable thermal capacity on the grid, to meet peak demand during periods of low wind and solar output.

These intermittent resources would then be curtailed when wind and solar output improves. As wind and solar penetration increases, a greater portion of their output will be curtailed for each additional unit of capacity installed.

This “overbuilding” and curtailing vastly increases the amount of installed capacity needed on the grid to meet electricity demand during periods of low wind and solar output. The subsequent curtailment during periods of high wind and solar availability effectively lowers the capacity factor of all wind and solar facilities, which greatly increases the cost per MWh produced because, to use our car analogy from above, the cost of the car is being spread over fewer miles.
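The car analogy can be put into numbers with a short sketch. It assumes a facility whose annual costs are entirely fixed and shows how the cost per MWh changes as a larger share of its potential output is curtailed. The function name, the cost figure, the capacity factor, and the curtailment shares are all hypothetical.

```python
HOURS_PER_YEAR = 8760


def cost_per_mwh(annual_fixed_cost, capacity_mw, capacity_factor,
                 curtailed_share=0.0):
    """Cost per delivered MWh when fixed costs are spread over less output."""
    potential_mwh = capacity_mw * HOURS_PER_YEAR * capacity_factor
    delivered_mwh = potential_mwh * (1 - curtailed_share)
    return annual_fixed_cost / delivered_mwh


# Hypothetical 100 MW wind farm with $12 million in annual fixed costs.
for share in (0.0, 0.1, 0.3, 0.5):
    cost = cost_per_mwh(12_000_000, capacity_mw=100,
                        capacity_factor=0.4, curtailed_share=share)
    print(f"{share:.0%} curtailed: ${cost:.2f}/MWh")
```

Because the delivered MWh sit in the denominator, curtailing half of a facility's output doubles its cost per MWh in this simplified setup.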

As you can see in the graph below from our Minnesota report, the more the grid tries to rely on wind and solar to meet demand, the more expensive overbuilding and curtailment become. Conversely, these costs are lower in a grid with more dispatchable generators.

Comparing New Renewables with Existing Resources

When wind and solar advocates claim that renewable energy sources are cheaper than coal, natural gas, and nuclear power, they usually compare the cost of building wind or solar to building new coal, natural gas, or nuclear plants.

However, these comparisons are missing the main point: we aren’t building a new electric grid from scratch, so we should be comparing the cost of new wind and solar with the cost of existing power plants that these intermittent generators would hope to replace. The truth is that we already have reliable, depreciated assets that produce electricity at low cost, and they could’ve kept doing so for decades.

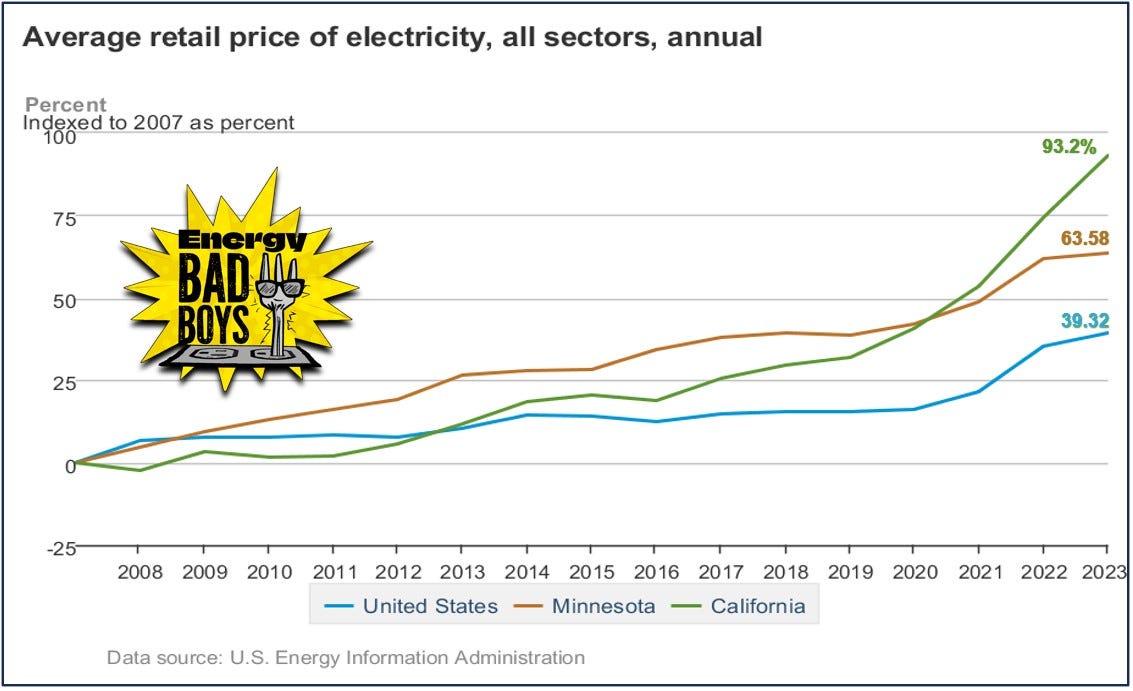

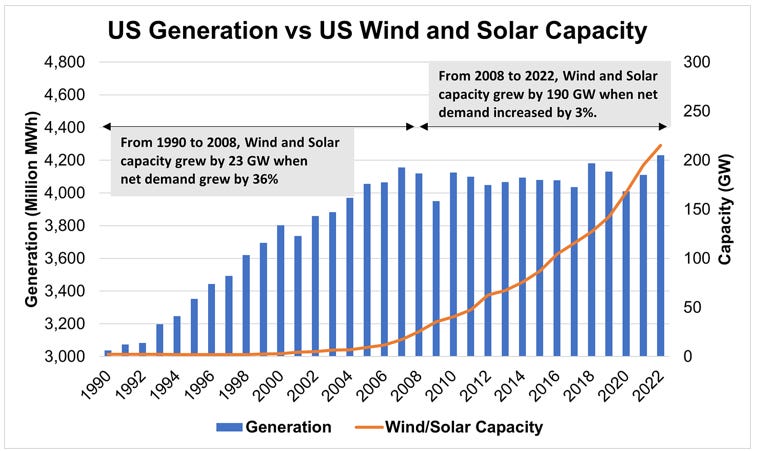

This means that building new wind and solar adds to the cost of providing electricity to the grid. If wind and solar were truly lower cost than other forms of energy, we would expect states like California and Minnesota, which have high penetrations of wind and solar, to see falling electricity costs. Instead, electricity prices in these states have increased much faster than the national average.

Keep in mind that wind and solar buildouts, which were driven by state mandates and not by the need for new energy sources to meet growing demand, were undertaken during a time of flat electricity demand, which spread more capital and hidden costs over the same number of megawatt hours. This is a major reason why the cost of electricity has soared in recent years, leaving families and businesses unable to realize significant cost savings from existing power facilities.
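The effect of flat demand can be illustrated with simple arithmetic. The sketch below assumes a system whose annual sales stay constant while new capital costs are layered on top of the existing ones, with an optional term for any costs the new facilities avoid, such as fuel. The function name and every figure are hypothetical placeholders, not data for any state.

```python
def average_cost_per_mwh(existing_annual_cost, added_annual_cost,
                         annual_mwh, avoided_annual_cost=0.0):
    """Average system cost in $/MWh when total sales stay flat."""
    total_cost = existing_annual_cost + added_annual_cost - avoided_annual_cost
    return total_cost / annual_mwh


# Hypothetical system: $5 billion in annual costs spread over 60 million MWh.
before = average_cost_per_mwh(5_000_000_000, 0, 60_000_000)
after = average_cost_per_mwh(5_000_000_000, 1_500_000_000, 60_000_000,
                             avoided_annual_cost=300_000_000)
print(f"Before: ${before:.2f}/MWh, after: ${after:.2f}/MWh")
```

In this setup the average cost rises whenever the added costs exceed the avoided ones, because the number of megawatt hours they are spread over does not grow.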

Conclusion

LCOE estimates can make wind and solar look cheap, so long as you ignore most of the costs of integrating them onto the grid and backing them up.

Comparing LCOEs makes sense when examining reliable, dispatchable power plants because these power plants can be turned on or off to meet electricity demand. It makes very little sense when you start including intermittent and weather-based energy sources that don’t provide the same value as thermal generators.

The intermittency of wind and solar imposes unique expenses on the electric grid, so deriving meaningful cost estimates for these generators requires evaluating the entire electric system. This is difficult to do, which is why most people don’t do it.

Our modeling attempts to provide this apples-to-apples comparison between running a reliable grid with dispatchable energy sources like coal, natural gas, and nuclear and running one with intermittent facilities like wind and solar. In every case, the answer is clear: wind and solar are by far the most expensive.


Profits Not Prices Drive Renewable Development, New Book Says from Bloomberg: Unless governments are willing to either assume the burden of renewables development through public ownership...they will have to keep subsidies and tax credits in place indefinitely or else renewables investment will collapse because of the unfavorable economics.

States shouldn’t have to pay for transmission driven by other states’ policies: FERC’s Christie from Utility Dive: Christie argues smart states should not need to subsidize the policies of less smart states.

[1] These estimates are usually produced by Lazard, Bloomberg New Energy Finance, or the U.S. Energy Information Administration (EIA).

Energy Bad Boys hit a home run with this essay. Yet, being an engineer I must point out a couple of issues. First, I was unsure what the term "Green Plating" referred to. Yes, it is an amusing play on the older term "gold plating" which referred to running up the cost of a project. However, this is a confusing point for ratepayers. Many people are very skeptical of the utility argument that wind and solar have no fuel cost thus must be the lowest cost alternative because their power bills are rising fast, yet can't quite refute the utility's logic about fuel cost. We need to rectify this very clearly.

One approach to crystallizing the argument is to point to empirical data that show rising costs as a function of renewables penetration. The Concerned Household Electricity Consumers Council (CHECC) in their lawsuit against the EPA is taking this route. However, there is quite a bit of noise in the empirical data which gives opponents a means to cloud the issue. Recognizing this flaw, I wrote a contribution for Watts Up With That (WUWT) last autumn that deconstructed the utility rate setting process to show, specifically, why rates will rise with wind/solar (https://wattsupwiththat.com/2023/11/05/setting-utility-rates/).

What you fellows call Green Plating is what specifically amounts to return on capital -- it is "paying for access to capital markets". Wind/solar add to the utility's rate base, and then will cost the rate payers in aggregate the rate base addition times the allowed return on rate base. So far, this appears no different than it would with thermal assets. However, because thermal assets that once were adequate to run the grid on their own are now being used to balance wind/solar intermittency, the addition of wind/solar lowers the capacity factor of other assets on the grid. What results is a higher rate-of-return cost per unit of delivered energy, which cannot do anything but raise rates.

Poor utilization of capital dominates, and I mean dominates, the entire energy evolution. You guys mention needed additions to long-haul transmission lines. These are needed for relatively low probability failures of local sources of total energy (an issue of renewables) -- i.e. they represent a poor capacity factor and poor utilization of capital. The worst problem with all of this is cost of storage. Using just 2023 data, I estimated that rate base in our balancing authority area will rise from its current $17,000 per ratepayer to well over $100,000 and perhaps to twice that per ratepayer in order to maintain constant reliability.

Think of the opportunity costs that this poor utilization of capital represents.

BTW, thanks for the reference to Mark Christie's commentary at the FERC. I come here to learn and I am never disappointed.

The first chart tells it all, although the final one showing wind/solar's rise vs. flat electricity production in combination with the rising costs of electricity in Minnesota is also quite informative. Great article, thanks.